4: AI Fast and Furious

Well That Was Quick

A personal update: after discussions with Erik Torenberg at Turpentine Media, I have decided to launch a daily (on weekdays) free newsletter and podcast series on AI.

Self Aware Neuron will go into pause mode by mid February, and I will be refunding the remainder of all annual subscriptions.

I will continue to post occasionally here, especially for non AI content.

Motivations? I wanted to write more than a weekly newsletter would allow, as there is a lot to talk about. I also wanted to home in on the maximum point of leverage for me right now, which is really as a kind of generalist intermediary between the research, engineering and product factions of the AI community.

I will take the liberty of adding all of you to the new daily newsletter in mid February. However, you can also follow the link Emergent Behavior to sign up immediately, as the first issue will be released tomorrow.

AI Remains Startlingly Expensive

My favorite datapoint on how expensive AI really is: Meta approached OpenAI for access to a fine-tuned coding assistant in mid 2023, and Boz was told it was going to cost him 7 cents per query, which at Meta’s scale would cost the organization a billion dollars a year. From The Verge last year:

Meta is talking to Microsoft and OpenAI about making an AI coding assistant for its engineers.

But the cost is “crazy” at about seven cents per query, CTO Andrew Bosworth recently told employees.
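To get a feel for what those two numbers imply together, here is a quick back-of-the-envelope sketch. The seven cents and the billion dollars come from the story above; spreading the queries evenly over 365 days is my own simplifying assumption, and the variable names are just illustrative.

```python
# Back-of-the-envelope: how many queries does a $1B/year bill imply at $0.07 each?
COST_PER_QUERY_USD = 0.07          # figure quoted above
ANNUAL_BILL_USD = 1_000_000_000    # the "billion dollars a year" estimate above
DAYS_PER_YEAR = 365                # assumption: usage spread evenly over the year

queries_per_year = ANNUAL_BILL_USD / COST_PER_QUERY_USD
queries_per_day = queries_per_year / DAYS_PER_YEAR

print(f"Implied queries per year: {queries_per_year / 1e9:.1f} billion")
print(f"Implied queries per day:  {queries_per_day / 1e6:.1f} million")
```

In other words, the billion-dollar figure only makes sense if the assistant is being hit tens of millions of times a day, which is roughly what you would expect if every autocomplete suggestion counts as a query.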

In essence, OpenAI’s inability to bring costs down fast enough forced Meta to create an internal effort to build a good language model and then open source it to collapse prices even faster.

The costs were significant enough that Microsoft essentially moved GitHub Copilot into harvest mode, refusing to upgrade the GPT-3 backbone to GPT-4, which allowed the rise of upstarts such as cursor.sh, offering subscription early access to GPT-4 Turbo codegen in VS Code.

Prices remain high enough, and availability low enough, that MSFT is still not able to guarantee access one year later.

51 Days to Save The World

In November, Alex Gerko, billionaire quant trader and largest taxpayer in the United Kingdom (the British feel very strongly about taxing the new wealth that competes with the old for London manors), announced the AI-MO prize: $10 million to be awarded to the team that can build an AI that can win gold at the International Mathematical Olympiad.

At that, OpenAI’s Noam Brown had this to say:

Wow, that's a huge prize pool

Noam, of course, built the bot that solved poker, moved from Meta to OpenAI to work on planning and reasoning, and was rumored to have been involved in Q*, a reasoning engine that could reportedly solve grade school math problems. The real question, of course, is whether OpenAI scaled up Q* and succeeded at any difficult math challenges.

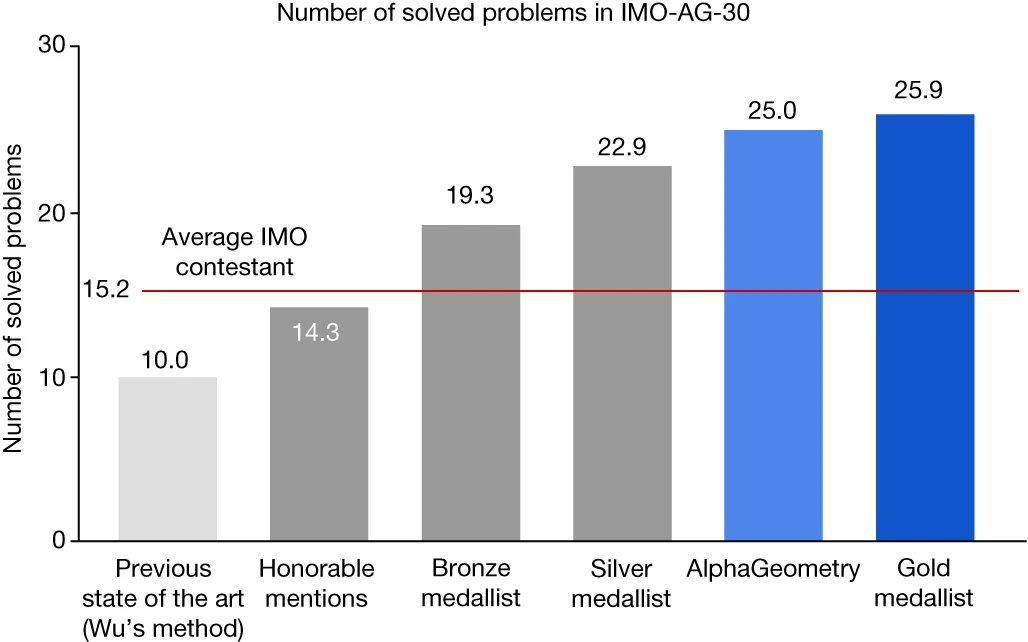

This does not seem that unlikely anymore, as just this week Google DeepMind reported AlphaGeometry, which, while solving ONLY geometry, performs just a little worse than a gold medallist at the IMO.

The AlphaGeometry build was simply a language model (the imagination piece) that thinks up new solutions, attached to a symbolic engine that checks solutions.

That’s all it took: 51 days to a partial solution.
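The shape of that design, one component that imagines candidates and another that checks them exactly, can be sketched in a few lines. To be clear, this is a toy of my own and not AlphaGeometry's code: the "imagination" here is a random guesser standing in for a language model, the "symbolic engine" is exact integer arithmetic, and the problem (finding an integer root of a cubic) and every function name are made up for illustration.

```python
import random
from typing import Callable, Optional, Tuple


def propose_and_check(
    propose: Callable[[], int],
    check: Callable[[int], bool],
    max_attempts: int,
) -> Tuple[Optional[int], int]:
    """Ask the proposer for candidates until the checker accepts one."""
    for attempt in range(1, max_attempts + 1):
        candidate = propose()
        if check(candidate):
            return candidate, attempt
    return None, max_attempts


# Toy stand-in for the "imagination" piece: a seeded random guesser.
rng = random.Random(0)


def guess_integer() -> int:
    return rng.randint(-10, 10)


# Toy stand-in for the symbolic engine: an exact check, no approximation.
def is_root(x: int) -> bool:
    return x**3 - 6 * x**2 + 11 * x - 6 == 0  # integer roots are 1, 2 and 3


solution, attempts = propose_and_check(guess_integer, is_root, max_attempts=1000)
print(f"Accepted candidate {solution} after {attempts} attempt(s)")
```

The point of the split is that the proposer is allowed to be wrong most of the time, because nothing counts as a solution until the checker has verified it.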

Things Happen

Managers only panic when Copilot for Office can prepare a complete PowerPoint presentation in 47 seconds. Of all things, this is what scares them:

This is the fourth edition of Self-Aware Neuron! I’m essentially moving to a free every-weekday schedule at Emergent Behavior this week. This weekly will continue till mid Feb. Thank you for the support!
